Synthetic Generation of Local Minima and Saddle Points for Neural Networks

Abstract

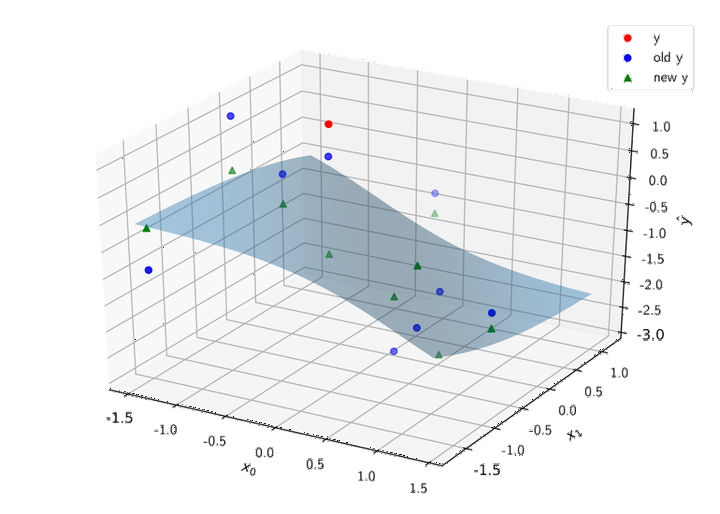

In this work-in-progress paper, we study the landscape of the empirical loss function of a feed-forward neural network, from the perspective of the existence and nature of its critical points with respect to the network weights, for synthetically generated datasets. Our analysis provides a simple method for the exact construction of one or more critical points of the network, obtained by controlling the dependent variables in the training set of a regression problem. The approach can be easily extended to control the entries of the Hessian of the loss function, which allows one to determine the nature of the critical points. As a consequence, by modifying the dependent variables for a sufficiently large number of points in the training set, an arbitrarily complex landscape for the empirical risk can be generated by creating arbitrary critical points. The approach described in the paper can be adopted to generate synthetic datasets for evaluating the performance of different training algorithms in the presence of arbitrary landscapes; more importantly, it can help achieve a better understanding of the mechanisms that allow the efficient optimization of highly non-convex functions during the training of deep neural networks. Our analysis, presented here in the context of deep learning, can be extended to the more general study of the landscape associated with other quadratic loss functions for nonlinear regression problems.
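The idea of placing a critical point by choosing the dependent variables can be illustrated with a minimal sketch. It assumes a squared loss L(w) = (1/2n) Σ_i (f(x_i; w) − y_i)² and a hypothetical one-hidden-layer tanh network with scalar output; the architecture and the helper names (`forward_and_jacobian`, `make_critical_targets`, `hessian_fd`, `classify`) are illustrative assumptions, and this is one possible construction rather than the authors' procedure. With J the n × p Jacobian of the outputs and r = f − y the residual vector, the gradient is Jᵀr / n, so any residual in the left null space of J (non-trivial when n exceeds the rank of J) makes a chosen w* critical. The Hessian is (1/n)(JᵀJ + Σ_i r_i ∇²f_i), so rescaling that residual changes the curvature at w* and hence the nature of the critical point.

```python
import numpy as np


def unpack(w, d, h):
    # Hypothetical parameter layout: W (h x d), then b (h), then v (h).
    W = w[: h * d].reshape(h, d)
    b = w[h * d : h * d + h]
    v = w[h * d + h :]
    return W, b, v


def forward_and_jacobian(w, X, h):
    """Outputs f(x_i; w) = v^T tanh(W x_i + b) and the n x p Jacobian df/dw."""
    n, d = X.shape
    W, b, v = unpack(w, d, h)
    A = np.tanh(X @ W.T + b)                  # hidden activations, shape (n, h)
    f = A @ v                                 # network outputs, shape (n,)
    G = (1.0 - A**2) * v                      # df/d(pre-activation), shape (n, h)
    J_W = (G[:, :, None] * X[:, None, :]).reshape(n, h * d)
    return f, np.hstack([J_W, G, A])          # columns ordered like w: W, b, v


def loss_grad(w, X, y, h):
    """Gradient of L(w) = (1 / 2n) * sum_i (f(x_i; w) - y_i)^2, i.e. J^T r / n."""
    f, J = forward_and_jacobian(w, X, h)
    return J.T @ (f - y) / len(y)


def make_critical_targets(w_star, X, h, scale=1.0, seed=0):
    """Targets y for which w_star is a critical point of the empirical loss.

    Any residual r = f - y in the left null space of J (non-trivial only when
    n > rank J) gives J^T r = 0, so the gradient vanishes at w_star.
    """
    f, J = forward_and_jacobian(w_star, X, h)
    U, s, _ = np.linalg.svd(J, full_matrices=True)
    rank = int(np.sum(s > 1e-10 * s[0]))
    null_basis = U[:, rank:]                  # orthonormal basis of null(J^T)
    rng = np.random.default_rng(seed)
    r = null_basis @ rng.standard_normal(null_basis.shape[1])
    return f - scale * r                      # residual f - y equals scale * r


def hessian_fd(w, X, y, h, eps=1e-5):
    """Loss Hessian from central differences of the analytic gradient."""
    p = w.size
    H = np.empty((p, p))
    for k in range(p):
        e = np.zeros(p)
        e[k] = eps
        H[:, k] = (loss_grad(w + e, X, y, h) - loss_grad(w - e, X, y, h)) / (2 * eps)
    return 0.5 * (H + H.T)                    # symmetrize away round-off


def classify(H, tol=1e-8):
    """Label a critical point from the signs of the Hessian eigenvalues."""
    eig = np.linalg.eigvalsh(H)
    if eig.min() > tol:
        return "local minimum"
    if eig.max() < -tol:
        return "local maximum"
    if eig.min() < -tol and eig.max() > tol:
        return "saddle point"
    return "degenerate"


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    n, d, h = 40, 2, 3                        # n > p = h*d + 2h = 12
    X = rng.standard_normal((n, d))
    w_star = rng.standard_normal(h * d + 2 * h)
    for scale in (0.0, 1.0, 10.0):
        y = make_critical_targets(w_star, X, h, scale=scale)
        g = loss_grad(w_star, X, y, h)
        print(scale, np.linalg.norm(g), classify(hessian_fd(w_star, X, y, h)))
```

With `scale=0` the targets equal the network outputs, so w* is a zero-loss global minimizer and the Hessian reduces to the positive semidefinite JᵀJ / n. Larger scales weight the second-order term Σ_i r_i ∇²f_i more heavily and may turn w* into a saddle point; `classify` reports this from the eigenvalues of the finite-difference Hessian. The finite-difference Hessian is a convenience choice for brevity; an analytic or autodiff Hessian would serve equally well.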

Principled Approaches to Deep Learning, ICML 2017, Sydney, Australia, August 10, 2017